Hitting Streaks Don’t Obey Your Rules: Evidence That Hitting Streaks Aren’t Just By-Products of Random Variation

This article was written by Trent McCotter

This article was published in the 2008 Baseball Research Journal


Professional athletes naturally experience hot and cold streaks. However, there’s been a debate going on for some time now as to whether professional athletes experience streaks more frequently than we would expect given the players’ season statistics. This is also known as having “the hot hand.”

For example, if a player is a 75 percent free-throw shooter this season and he’s made his last 10 free throws in a row, does he still have just a 75 percent chance of making the 11th free throw? The answer from most statisticians would be a resounding Yes, but many casual observers believe that the player is more likely to make the 11th attempt because he’s been “hot” lately and that his success should continue at a higher rate than expected. Two common explanations for why a player may be “hot” are that his confidence is boosted by his recent success or that his muscle memory is better than usual, producing more consistency in his shot or swing.

AS IT RELATES TO BASEBALL

The question is this: Does a player’s performance in one game (a “trial,” if you will) have any predictive power for how he will do in the next game (the next trial)? If a baseball player usually has a 75 percent chance of getting at least one base hit in any given game and he’s gotten a hit in 10 straight games, does he still have a 75 percent chance of getting a hit in the 11th game? This is essentially asking, “Are batters’ games independent from one another?”

As with the free-throw example, most statisticians will say that the batter in fact does still have a 75 percent chance of getting a hit in the next game, regardless of what he did in the last 10. In fact, this assumption has been the basis for several Baseball Research Journal articles in which the authors have attempted to calculate the probabilities of long hitting streaks, usually Joe DiMaggio’s major-league record 56-game streak in 1941. It was this assumption about independence that I wanted to test, especially in those rare cases where a player has a long hitting streak (20 consecutive games or more). These are the cases where the players are usually aware that they’ve got a long streak going.

If it’s true that batters in the midst of a long hitting streak are more likely than usual to continue it (they’re on a “hot streak”), then more 20-game hitting streaks should have actually happened than we would theoretically expect. That is, if players realize they’ve got a long streak going, they may change their behavior (maybe by taking fewer walks or going for more singles as opposed to doubles) to try to extend their streaks; or maybe they really are in an abnormal “hot streak.” But how do we determine what the theoretical number of 20-game hitting streaks should be?

In the standard method, we start by figuring out the odds of a batter going hitless in a particular game, and then we subtract that value from 1; that will yield a player’s theoretical probability of getting at least one hit in any given game:

$$P(\text{at least one hit}) = 1 - (1 - \text{AVG})^{AB/G}$$

For a fabricated player named John Dice who hit .300 in 100 games with 400 at-bats, this number would be:

$$1 - (1 - .300)^{400/100} = 1 - 0.7^{4} = 1 - 0.2401 = .7599$$

That is, about a 76 percent chance of at least one hit in any given game.
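The per-game formula translates directly into a small function. This is a minimal sketch that makes the same assumption as the standard method, namely that every at-bat is an independent trial whose success probability equals the season batting average; the function name `hit_game_probability` is hypothetical.

```python
def hit_game_probability(avg: float, at_bats: int, games: int) -> float:
    """Chance of at least one hit in a game, treating at-bats as independent trials."""
    ab_per_game = at_bats / games          # average at-bats per game (may be fractional)
    p_hitless = (1 - avg) ** ab_per_game   # chance of making an out in every at-bat
    return 1 - p_hitless

# John Dice: .300 average, 400 at-bats in 100 games
print(round(hit_game_probability(0.300, 400, 100), 4))  # 0.7599
```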

With the help of Retrosheet’s Tom Ruane, I did a study over the 1957–2006 seasons to see how well that formula can predict the number of games in which a player will get a base hit. For example, in the scenario above, we would expect John Dice to get a hit in about 76 of his games; it turns out the formula above is indeed very accurate at predicting a player’s number of games with at least one hit.

Thus, if games really are independent from one another and don’t have predictive power when it comes to long hitting streaks, this means that John Dice’s 100-game season can be seen as a series of 100 tosses of a weighted coin that will come up heads 76 percent of the time; long streaks of heads will represent long streaks of getting a hit in each game. This method for calculating the odds of hitting streaks was used by Michael Freiman in his article “56-Game Hitting Streaks Revisited” in BRJ 31 (2002), and it was also used by the authors of a 2008 op-ed piece in the New York Times:

Think of baseball players’ performances at bat as being like coin tosses. Hitting streaks are like runs of many heads in a row. Suppose a hypothetical player named Joe Coin had a 50–50 chance of getting at least one hit per game, and suppose that he played 154 games during the 1941 season. We could learn something about Coin’s chances of having a 56-game hitting streak in 1941 by flipping a real coin 154 times, recording the series of heads and tails, and observing what his longest streak of heads happened to be.

Our simulations did something very much like this, except instead of a coin, we used random numbers generated by a computer. Also, instead of assuming that a player has a 50 percent chance of hitting successfully in each game, we used baseball statistics to calculate each player’s odds, as determined by his actual batting performance in a given year.

For example, in 1941 Joe DiMaggio had an 81 percent chance of getting at least one hit in each game . . . we simulated a mock version of his 1941 season, using the computer equivalent of a trick coin that comes up heads 81 percent of the time.

— Samuel Arbesman and Steven Strogatz, New York Times, 30 March 2008
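A simulation in the spirit of the one described in the Times piece can be sketched as follows. This is not the authors’ code: the function names, the fixed seed, and the default of 10,000 trials are illustrative assumptions. Each game is an independent weighted coin flip, and the function estimates how often a season contains a streak of at least a given length.

```python
import random

def longest_streak(outcomes):
    """Length of the longest run of consecutive True values."""
    best = current = 0
    for hit in outcomes:
        current = current + 1 if hit else 0
        best = max(best, current)
    return best

def simulate_streak_odds(p_hit, games, streak_len, trials=10_000, seed=1):
    """Estimate P(longest streak >= streak_len) when each game is an independent coin flip."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(trials):
        season = [rng.random() < p_hit for _ in range(games)]  # True = got a hit
        if longest_streak(season) >= streak_len:
            successes += 1
    return successes / trials

# e.g., an 81 percent chance of a hit per game over a 154-game season
print(simulate_streak_odds(0.81, 154, 30))
```

The estimate carries Monte Carlo noise; more trials narrow it, at the cost of run time.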

But I wondered whether this method has a fundamental problem as it relates to looking at long hitting streaks, because it uses a player’s overall season stats to make inferences about what his season must have looked like on a game-by-game basis.

Think of the example of flipping a coin. That’s about as random as you can get, and we wouldn’t really consider the outcome of your last flip to affect the outcome of your next flip. That means that we can rearrange those heads and tails in any random fashion and the only variation in streaks of heads would be due entirely to random chance. If this were true in the baseball example, it would mean that we could randomly rearrange a player’s season game log (listing his batting line for each game) and the only variation in the number of long streaks that we would find would be due entirely to random chance.

THE NUMBER-CRUNCHING

To see who’s right about this, we need to solve the problem of how to calculate the theoretical number of hitting streaks we would expect to find. It turns out that the answer actually isn’t too complicated. I took the batting lines of all players for 1957 through 2006 and subtracted out the 0-for-0 batting lines, which neither extend nor break a hitting streak. I ended up with about 2 million batting lines.
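The cleanup step and the streak count can be sketched as follows. This assumes, hypothetically, that a game log is a chronological list of `(at_bats, hits)` pairs for one player-season; the layout and function name are illustrative. Games with zero at-bats are skipped, as in the study, and each maximal run of games with a hit is tallied by its length.

```python
from collections import Counter

def streak_lengths(game_log):
    """Tally maximal hitting streaks in a chronological list of (at_bats, hits) pairs.

    0-for-0 games are skipped: they neither extend nor break a streak.
    """
    counts = Counter()
    current = 0
    for at_bats, hits in game_log:
        if at_bats == 0:
            continue                  # 0-for-0: ignore entirely
        if hits > 0:
            current += 1              # a hit extends the streak
        else:
            if current:
                counts[current] += 1  # a hitless game ends the streak
            current = 0
    if current:
        counts[current] += 1          # streak still alive at season's end
    return counts

log = [(4, 1), (3, 2), (0, 0), (4, 1), (4, 0), (5, 2), (4, 0)]
print(streak_lengths(log))  # Counter({3: 1, 1: 1})
```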

Then, with the impressive assistance of Dr. Peter Mucha of the Mathematics Department at the University of North Carolina, I took each player’s game log for each season of his career and sorted the game-by-game stats in a completely random fashion. So this means that, for instance, I’m still looking at John Dice’s .300 average, 100 games, and 400 at-bats—but the order of the games isn’t chronological anymore. It’s completely random. It’s exactly analogous to taking the coin tosses and sorting them randomly over and over to see what long streaks of heads will occur. See the example at the end of this article for a visual version of this.

Dr. Mucha and I repeated this random sorting ten thousand separate times for every player-season from 1957 through 2006. For each of the 10,000 permutations, we counted how many hitting streaks of each length occurred. The difference between this method and the method that has been employed in the past is that, by using the actual game-by-game stats (sorted randomly for each player), we don’t have to make theoretical guesses about how a player’s hits are distributed throughout the season. Remember, if players’ games were independent from one another, this method of randomly sorting each player’s games should, in the long run, yield the same number of hitting streaks of each length that happened in real life.
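The permutation procedure might be sketched like this. It is not Dr. Mucha’s code; it assumes each player-season is already a list of per-game hit flags with 0-for-0 games removed, and the names, seed, and default trial count are illustrative. Each trial shuffles every player-season independently and counts streaks of at least `min_len` games across all of them; the mean and spread over trials describe what independence would predict.

```python
import random
import statistics

def count_streaks(flags, min_len):
    """Number of maximal runs of True with length >= min_len."""
    total = run = 0
    for hit in flags + [False]:    # sentinel closes a streak alive at season's end
        if hit:
            run += 1
        else:
            if run >= min_len:
                total += 1
            run = 0
    return total

def permutation_test(seasons, min_len, trials=10_000, seed=0):
    """Compare the real streak count with counts from randomly reordered seasons."""
    rng = random.Random(seed)
    observed = sum(count_streaks(s, min_len) for s in seasons)
    simulated = []
    for _ in range(trials):
        total = 0
        for s in seasons:
            shuffled = s[:]        # same games, only the order changes
            rng.shuffle(shuffled)
            total += count_streaks(shuffled, min_len)
        simulated.append(total)
    return observed, statistics.mean(simulated), statistics.stdev(simulated)

# toy example: two player-seasons of hit (True) / hitless (False) games
seasons = [[True] * 8 + [False] * 4, [True, False] * 6]
print(permutation_test(seasons, min_len=5, trials=1_000))
```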

Here are the results.

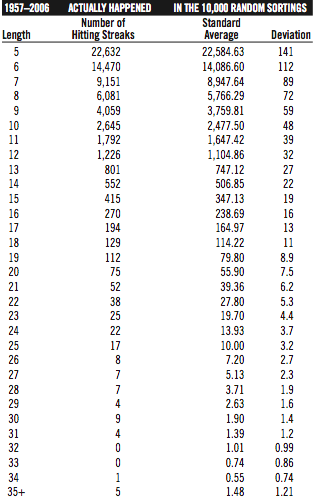

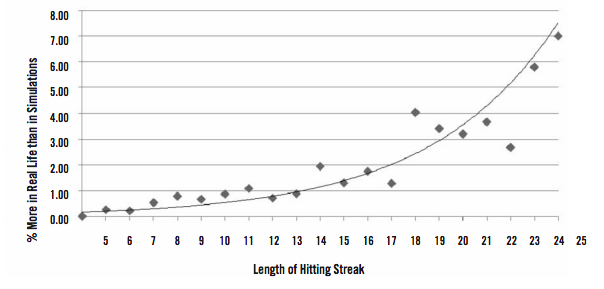

TABLE 1.

TABLE 2.

It’s clear that, for every length of hitting streak of 5-plus games, there have been more streaks in reality than we would expect given players’ game-by-game stats. To give those numbers some meaning: There were 19 single-season hitting streaks of 30-plus games from 1957 through 2006. The ten thousand separate, random sortings of the game-by-game stats produced an average of 7.07 such streaks for 1957–2006. That means that almost three times as many 30-plus-game hitting streaks have occurred in real life as we would have expected.

Because there were 10,000 trials in our permutation, the expected values are well established, and the differences from real life are highly significant. For instance, the average number of 5-plus-game streaks in the permutations was about 62,766, with a standard deviation of about 151, and there were 64,803 such streaks in real life from 1957 through 2006. This means that the real-life total was 13.5 standard deviations away from the expected mean, which implies that the odds of getting these numbers simply by chance are about one in 150 duodecillion (150 followed by 39 zeros). The number of hitting streaks that have really happened is significantly higher than we would expect if long hitting streaks could in fact be predicted using the coin-flip model. Additionally, the results of the 10,000 trials converged: the first 5,000 trials had almost exactly the same averages and standard deviations as the second 5,000 trials.
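The arithmetic behind the significance claim can be checked from the figures quoted above. This sketch assumes, as a normal approximation, that the permutation totals are roughly normally distributed, and computes a one-sided tail probability with `math.erfc`; treat the result as an order-of-magnitude check rather than an exact probability.

```python
import math

observed = 64_803     # real-life 5-plus-game streaks, 1957-2006
perm_mean = 62_766    # average over the permutations
perm_sd = 151         # standard deviation over the permutations

z = (observed - perm_mean) / perm_sd
# one-sided upper-tail probability of a standard normal beyond z
p_value = 0.5 * math.erfc(z / math.sqrt(2))

print(f"z = {z:.2f}")   # z = 13.49
print(f"p = {p_value:.1e}")
```

With these rounded inputs the tail probability comes out near 1 in 10^41, the same order of magnitude as the figure in the text; the exact value is sensitive to how z is rounded.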

But what does this all mean? What it seems to indicate is that many of the attempts to calculate the probabilities of long hitting streaks are actually underestimating the true odds that such streaks will occur. Additionally, if hits are not IID (independent and identically distributed) events, then it may be extremely difficult to devise a way to calculate probabilities that do produce more accurate numbers.

SO WHY DON’T THE PERMUTATIONS MATCH THE REAL-LIFE NUMBERS?

It’s easier to begin by debunking several commonsense explanations as to why the permutations didn’t produce a similar number of hitting streaks as happened in real life.

The first one I thought of was the quality of the opposing pitching. If a batter faces a bad pitching staff, he’d naturally be more likely to start or continue a hitting streak, relative to his overall season numbers. But the problem with this explanation is that the effect is too short-lived; you can’t face bad pitching for very long without it noticeably increasing your numbers, plus you can’t play twenty games in a row against bad pitching staffs, which is what would be required to put together a long streak. This same reasoning is why playing at a hitter-friendly stadium doesn’t seem to work either, since these effects don’t continue for the necessary several weeks in a row. In other words, the explanation must be something that lasts longer than a four-game road trip to Coors Field or getting to face Jose Lima twice in one month.

The second possible explanation, one that I really thought could explain everything, was the weather. That is, it’s commonly believed that hitting increases during the warm summer months, which would naturally make long hitting streaks more likely, while the cooler weather at the beginning and the end of the season makes streaks less likely. This would explain why long streaks seem to happen so much more often than we’d expect; the warmest period of the summer can last for months, seemingly making it fertile ground to start a hitting streak. The reason this is important is that streak odds compound: a 20-game streak requires 20 straight games with a hit, so its probability scales roughly like the per-game hit probability raised to the 20th power, and a modest rise in that per-game probability is magnified many times over. That is, a player who hits .300 for two months will be less likely to have a hitting streak than a player who hits .200 one month and .400 the next; the two players would have the exact same numbers, but hitting streaks strongly favor batters who are hitting very well, even if it’s just for a short period, and even if it’s counterbalanced by a period of poor hitting.
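The claim that a hot month plus a cold month beats two steady months can be checked exactly under the coin-flip model, letting the per-game hit probability change from game to game. This is an illustration under stated assumptions, not a calculation from the study: four at-bats per game, 30-game months, a 20-game target streak, and per-game probabilities from the article’s formula. The function names are hypothetical.

```python
def game_prob(avg, ab_per_game=4):
    """Chance of at least one hit in a game, treating at-bats as independent."""
    return 1 - (1 - avg) ** ab_per_game

def prob_streak_at_least(game_probs, k):
    """Exact P(some run of >= k consecutive hit games) for independent games.

    state[j] = probability the current streak is exactly j games (j < k) and no
    k-game streak has happened yet; `done` accumulates the probability that one has.
    """
    state = [1.0] + [0.0] * (k - 1)
    done = 0.0
    for p in game_probs:
        new = [0.0] * k
        new[0] = (1 - p) * sum(state)   # a hitless game resets the streak
        for j in range(k - 1):
            new[j + 1] = p * state[j]   # a hit extends the streak
        done += p * state[k - 1]        # streak reaches k games
        state = new
    return done

steady = [game_prob(0.300)] * 60                            # .300 for two months
split = [game_prob(0.200)] * 30 + [game_prob(0.400)] * 30   # .200, then .400
print(prob_streak_at_least(steady, 20))
print(prob_streak_at_least(split, 20))
```

Both players hit .300 overall, yet the split player comes out well ahead, because the .400 month raises his per-game hit probability and that gain compounds over every game of the streak.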

The problem with the weather explanation is that the stats don’t bear it out. Of the 274 streaks of 20-plus games from 1957 through 2006, there were just as many that began in May as began in June, July, or August. If it were true that the hottest months spawned hitting streaks, we would see a spike in streaks that began in those months. We don’t see that spike at all.

So that eliminates the explanations that would seem to be the most likely. Remember, if all of the assumptions about independence were right, we wouldn’t even have these differences between the expected and actual number of streaks in the first place; so it’s yet another big surprise that our top explanations for these discrepancies also don’t seem to pan out. This leaves me with two other possible explanations, each of which may involve psychology more than mathematics.

FIRST EXPLANATION

Maybe the players who have long streaks going will change their approach at the plate and go for fewer walks and more singles to keep their hitting streaks going. This same idea is covered in The Bill James Goldmine, where James discusses how pitchers will make an extra effort to reach their 20th victory of a season, which results in there being more 20-game winners in the majors than 19-game winners. There is evidence of this effect, too, as seen in graph 1, which visualizes the chart from earlier.

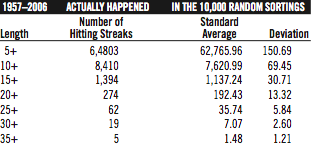

GRAPH 1: Real Life vs. Random Permutation

Notice how the number of streaks around 25 games, and especially around 30 games, spikes up, relative to the general decreasing trend of longer hitting streaks. These streaks are pretty rare, so we’re dealing with small samples, but this helps show that hitters may really be paying attention to their streaks (especially their length), which lends a lot of credibility to the idea that hitters may change their behavior to keep their streaks going.

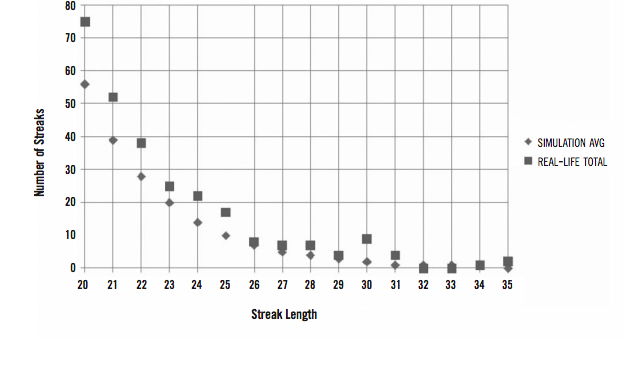

Also lending some credibility to this explanation is that the spread (the difference between how many streaks really happened and how many we expected to happen) seems to increase as the length of the streak increases. That is, there have been about 7 percent more hitting streaks of 10 games than we would expect, but there have been 20 percent more streaks of 15 games, and there have been 80 percent more streaks of 25 games. Perhaps, as a streak gets longer, a batter will become more focused on it, thinking about it during every at-bat and doing anything to stay in the lineup so that he gets more plate appearances. There may also be a self-fulfilling prophecy here; as a player starts hitting well, his team will tend to score more runs, which will give the batter more plate appearances. So hitting well lends itself to getting more chances to extend a hitting streak. Also, pitchers may be hesitant to walk batters (and batters hesitant to take walks) because the players want the streak to end “legitimately,” with the batter being given several opportunities to extend the streak. The extra at-bats per game also account for the slope of graph 2, which shows an exponential trend in the number of “extra” hitting streaks that have occurred in real life as opposed to the permutations.

GRAPH 2: Real Life vs. Random Permutation

As streak length increases, those extra at-bats make streaks increasingly likely. For instance, if we take a .350 hitter who plays 150 games and increase his at-bats per game from 4.0 to 4.28 (about a 6.9 percent increase) for an entire season, his odds of a 20-game hitting streak increase by 34 percent, but his odds of a 30-game streak increase by 81 percent, and his odds of a 56-game streak increase by an amazing 244 percent. Keep in mind that those increases are larger than we would see in our hitting-streak data because the 6.9 percent increase in at-bats per game applies only to the 20 or so games during the hitting streak, not the entire 150 games that a batter plays during a season. It is difficult to determine how many more streaks we would see if hitters’ at-bats were allowed to increase by 6.9 percent for only selected stretches of their season. See graph 2 for a representation of how, as streak length increases, there have been more such streaks in real life than we averaged in the random permutations.

The evidence that streaking hitters really do get extra at-bats is that 85 percent of the players who had 20-plus-game hitting streaks from 1957 through 2006 had more at-bats per game during their hitting streak than they had for their season as a whole. Overall, it worked out to an average 6.9 percent increase in at-bats per game during their streak. That extra 6.9 percent of at-bats per game almost certainly accounts for a portion of the “extra” hitting streaks that have occurred in real life as opposed to our permutations.

This increase in at-bats per game during a streak makes sense, as batters are much less likely to be used as a pinch-hitter or be taken out of a game early when they have a hitting streak going. Additionally, when a player is hitting well, his manager is more likely to keep him in the starting lineup or even move him up in the batting order, giving him more chances to keep it going.
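The .350-hitter comparison can be approximated with an exact streak calculation. This sketch assumes independent games, the same number of at-bats in all 150 games, and per-game hit probabilities from the formula given earlier in the article; the helper name is hypothetical, and the printed percentages may differ somewhat from the ones quoted in the text depending on the exact inputs used there.

```python
def prob_streak_at_least(p, games, k):
    """Exact P(a run of >= k consecutive hit games) when every game has hit probability p."""
    state = [1.0] + [0.0] * (k - 1)   # state[j]: current streak is j games, no k-streak yet
    done = 0.0
    for _ in range(games):
        done += p * state[k - 1]      # a hit from j = k-1 completes a k-game streak
        # a miss resets to 0; a hit moves j to j + 1 (right side uses the old state)
        state = [(1 - p) * sum(state)] + [p * s for s in state[:-1]]
    return done

avg, games = 0.350, 150
base = 1 - (1 - avg) ** 4.0      # 4.0 at-bats per game
boosted = 1 - (1 - avg) ** 4.28  # 4.28 at-bats per game (about 6.9 percent more)

for k in (20, 30, 56):
    before = prob_streak_at_least(base, games, k)
    after = prob_streak_at_least(boosted, games, k)
    print(f"{k}-game streak: {100 * (after / before - 1):.0f}% more likely")
```

The pattern to look for is that the relative boost grows with streak length, because the small per-game gain is compounded over more games.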

SECOND EXPLANATION

Something else may be significantly increasing the chances of long streaks, possibly a hot-hand effect: hitters may become more likely to have a hitting streak because they go through periods in which they consistently hit better than their overall numbers suggest. This hot streak may happen at almost any point during a season, which would explain why we don’t see a spike in streaks during certain parts of the year.

At first glance, the results of a hot hand would appear very similar to the hot-weather effect: If you’ve been hitting well lately, it’s likely to continue, and if you haven’t been hitting well lately, that’s likely to continue as well. The difference is this: If it’s the weather that’s the lurking variable, then you continue hitting well because you naturally hit better during this time of year. If it’s a hot-hand effect, then you continue hitting well because you’re on a true hot streak. But we have seemingly shown that the weather doesn’t have an effect on hitting streaks, thereby providing some credibility for the hot-hand idea.

We expect a player to have a certain amount of hot and cold streaks during any season, but the hot-hand effect says that the player will have hotter hots and cooler colds than we’d expect. So the player’s overall totals still balance out, but his performance is more volatile than we would expect using the standard coin-flip model.

There may be some additional evidence for this. Over the period 1957–2006, there were about 7 percent more 3- and 4-hit games in real life than we would expect given the coin-flip model, but also about 7 percent more hitless games. Over 50 seasons, those percentages really add up. What this means is that the overall numbers still balance out over the course of a season, but we’re getting more “hot games” than we would expect, which is being balanced by more “cold games” than we would expect.
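One way to make the “more hot games and more cold games” comparison concrete is to compute, for each game, how likely a hitless game or a 3-plus-hit game would be if every at-bat were an independent trial at the player’s season average, then compare the expected totals with the actual ones. This sketch assumes a game log of `(at_bats, hits)` pairs with 0-for-0 games already removed and uses each game’s actual at-bats; it illustrates the comparison and is not the study’s procedure. The toy log is invented.

```python
from math import comb

def binom_tail(n, p, k):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

def hot_cold_summary(game_log):
    """Observed vs. expected hitless and 3+-hit games for one player-season."""
    total_ab = sum(ab for ab, _ in game_log)
    avg = sum(h for _, h in game_log) / total_ab
    expected_hitless = sum((1 - avg) ** ab for ab, _ in game_log)
    expected_big = sum(binom_tail(ab, avg, 3) for ab, _ in game_log)
    observed_hitless = sum(1 for _, h in game_log if h == 0)
    observed_big = sum(1 for _, h in game_log if h >= 3)
    return {
        "hitless": (observed_hitless, expected_hitless),
        "3+ hits": (observed_big, expected_big),
    }

log = [(4, 0), (4, 3), (5, 0), (4, 1), (3, 0), (4, 3), (4, 1), (4, 0)]
print(hot_cold_summary(log))
```

If games were independent coin flips at the season rate, observed and expected counts should agree on average over many player-seasons; an excess at both ends is the signature of extra volatility.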

Additionally, there is evidence that tends to favor the hot-hand approach over the varying-at-bat approach. Dr. Mucha and I ran a second permutation of 10,000 trials that was the same as the first permutation—except we eliminated all the games where the batter did not start the game. In our first permutation, we implicitly assumed that non-starts are randomly sprinkled throughout the season. But that is likely not the case. Batters will tend to have their non-starts clustered together, usually when they return from an injury and are used as a pinch-hitter, when they have lost playing time and are used as a defensive replacement, or when they are used sparingly as the season draws to a close.

We expected that this second permutation would contain more streaks than the first permutation, as we essentially eliminated a lot of low-at-bat games, which are much more likely to end a hitting streak prematurely. The question was whether this second permutation would contain roughly the same number of streaks as occurred in real life.
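The only change in the second permutation is a filter applied before shuffling. A minimal sketch, assuming each game record carries a hypothetical `started` flag alongside at-bats and hits (the record layout is invented for illustration):

```python
import random
from dataclasses import dataclass

@dataclass
class Game:
    at_bats: int
    hits: int
    started: bool   # hypothetical field: did the batter start this game?

def hit_flags(games, starts_only):
    """Per-game hit flags, dropping 0-for-0 games and, optionally, non-starts."""
    return [g.hits > 0 for g in games
            if g.at_bats > 0 and (g.started or not starts_only)]

def longest_after_shuffle(games, starts_only, rng):
    """Shuffle one player-season's games and return its longest hitting streak."""
    flags = hit_flags(games, starts_only)
    rng.shuffle(flags)
    best = run = 0
    for hit in flags:
        run = run + 1 if hit else 0
        best = max(best, run)
    return best

rng = random.Random(42)
season = [Game(4, 1, True)] * 20 + [Game(1, 0, False)] * 5
print(longest_after_shuffle(season, starts_only=False, rng=rng))  # hitless pinch-hit games may break the run
print(longest_after_shuffle(season, starts_only=True, rng=rng))   # 20: only starts remain
```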

The outcome actually comported very well with our expectations. In general, there were more streaks in this second permutation than in the first permutation—but still fewer streaks than there were in real life. For instance, in real life for 1957–2006, there were 274 streaks of 20 or more games; the first permutation (including non-starts) had an average of a mere 192 such streaks; and the second permutation (leaving out non-starts) had an average of 259 such streaks. The difference between 259 and 274 may not sound like much, but it is still very significant when viewed over 10,000 permutations, especially since we still aren’t quite comparing apples to apples. There undoubtedly will be streaks that fall just short of 20 games when looking only at starts but that would go to 20 or more games when non-starts (e.g., successful pinch-hitting appearances) are included.

As the streak length increases, the difference between real life and the two permutations widens even further. For streaks of 30 or more games, there were 19 in real life, with an average of only 10 in our second permutation when we look only at starts. In this paper I deal primarily with long streaks, but I will point out that, for streaks shorter than 15 games, the pattern does not hold; there were fewer short streaks in real life than in the second permutation when we look only at starts.

The reason this favors the hot-hand effect is this: Our first explanation above relies on the idea that players are getting significantly more at-bats per game during their hitting streak than during the season as a whole. But the reason for a large part of that difference is that players are not frequently used as non-starters (e.g., pinch-hitters) during their streak, so it artificially inflates the number of at-bats per game that the batters get during their streaks relative to their season as a whole. Pinch-hitting appearances have little effect on real-life hitting streaks because managers are hesitant to use a batter solely as a pinch-hitter if he is hitting well. So we should be able to remove the pinch-hitting appearances from our permutations and get results that closely mirror real life. But when we do that, we still get the result that there have been significantly more hitting streaks in real life than there “should have been.” This tends to add some weight to the hot-hand effect, since it just does not match up with what we would expect if the varying number of at-bats per game were the true cause.

Besides the hot-hand effect, other conditions that may be immeasurable could be playing a part. For instance, scorers may be more generous to hitters who have a long streak going, hating to see a streak broken because of a borderline call on a play that could reasonably have been ruled a base hit.

CONCLUSION

If you take away only one thing from this article, it should be this: This study seems to provide some strong evidence that players’ games are not independent, identically distributed trials, as statisticians have assumed all these years, and it may even provide evidence that things like hot hands are a part of baseball streaks. It will likely take even more study to determine whether it’s hot hands, or the change in behavior driven by the incentive to keep a streak going, or some other cause that really explains why batters put together more hitting streaks than they should have, given their actual game-by-game stats. Given the results, it’s highly likely that the explanation is some combination of all of these factors.

The idea that hitting streaks really could be the by-product of having the hot hand is intriguing. It will tend to chafe statisticians, who rely on that key assumption of independent, identically distributed trials in order to calculate probabilities. When we remove the non-starts that could have thrown a wrench into our first permutation and still find significantly more long streaks in real life than in the permutations, that really does lend some evidence to the possibility that what has happened in real life just does not match what a “random walk” would look like.

From the overwhelming evidence of the permutations, it appears that, when the same math formulas used for coin tosses are used for hitting streaks, the probabilities they yield are incorrect; those formulas incorrectly assume that the games in which a batter gets a hit are distributed randomly throughout his season. This also means that maybe all those baseball purists have had it at least partially right all this time; maybe batters really do experience periods where their hitting is above and beyond what would be statistically expected given their usual performance.

In his review of Michael Seidel’s book Streak, Harvard biologist Stephen Jay Gould wrote:

Everybody knows about hot hands. The only problem is that no such phenomenon exists. The Stanford psychologist Amos Tversky studied every basket made by the Philadelphia 76ers for more than a season. He found, first of all, that probabilities of making a second basket did not rise following a successful shot. Moreover, the number of “runs,” or baskets in succession, was no greater than what a standard random, or coin-tossing, model would predict.

Gould’s point is that hitting streaks are analogous to the runs of baskets by the 76ers in that neither should show any signs of deviating from a random coin-tossing model. I hate to disagree with a Harvard man, but my study of long hitting streaks for 1957 through 2006 seems to show that the actual number of long hitting streaks is in fact not the same as what a coin-tossing model would produce, even when we try to account for the fact that players get varying numbers of at-bats per game. By using the coin-flip model all of these years, we have been underestimating the likelihood that a player will put together a 20-, 30-, or even a magical 56-game hitting streak.

But this study doesn’t just look at the statistics side of baseball. It also reveals the psychology of it. This study shows that sometimes batters really may have a hot hand, or at least that they adapt their approach to try to keep a long hitting streak going—and baseball players are nothing if not adapters.

COIN-FLIP EXAMPLE

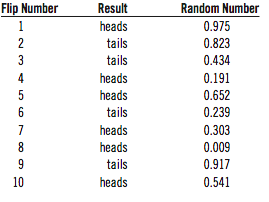

I flipped a coin ten times and wrote down the result. I then had my computer give me a random number that is somewhere between 0 and 1, and I assigned that number to each coin flip:

TABLE 3.

We can consider table 3 to be like John Dice’s batting log. Each game with a “heads” is a game where he got a hit. Each game with a “tails” is one in which he went hitless. The longest streak of heads was two in a row.

Now, I take those results above and sort them by that random number instead:

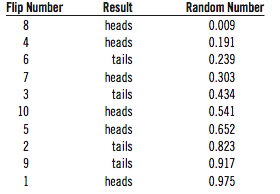

TABLE 4.

They’re still the same outcomes as before; they’ve just been reordered completely at random. Our longest streak of heads here is two in a row as well. It just so happens that we end up with the same longest streak of heads in this random sorting as we did in the original tossing. But now that the results are sorted randomly, any variation in the streaks we find will be due completely to chance.
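The procedure in this example, attaching a random number to each flip and sorting on it, takes only a few lines. The seed and the particular flips here are arbitrary assumptions, not the ones in tables 3 and 4. Sorting on independent uniform random keys yields a uniformly random ordering (ties are vanishingly unlikely), the same kind of reordering that `random.shuffle` produces.

```python
import random

def longest_run(seq, value):
    """Longest run of consecutive items equal to `value`."""
    best = run = 0
    for item in seq:
        run = run + 1 if item == value else 0
        best = max(best, run)
    return best

rng = random.Random(7)
flips = [rng.choice("HT") for _ in range(10)]   # ten coin flips in original order
keyed = [(rng.random(), f) for f in flips]      # attach a random number to each flip
reordered = [f for _, f in sorted(keyed)]       # sort by that random number

print("".join(flips), longest_run(flips, "H"))
print("".join(reordered), longest_run(reordered, "H"))
```

The reordered sequence always has the same number of heads as the original; only the arrangement, and therefore the streaks, can change.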

For coin tosses, we expect to find about the same number of long streaks from one trial to the next. And if hitters’ results were like coin tosses, we would expect to find about the same number of long hitting streaks from one trial to the next. But my results show that the original order of baseball games (analogous to the first table of coin flips) is significantly more likely to contain long hitting streaks than the random order of baseball games (analogous to the second table of coin flips).

TRENT MCCOTTER is a law student at the University of North Carolina at Chapel Hill.

ACKNOWLEDGMENTS

Peter Mucha of the Mathematics Department at the University of North Carolina deserves major applause for his great willingness to review my article and especially for writing the code that would randomly permute fifty years’ worth of information a mind-boggling 10,000 times—and then doing it again for our second permutation. Had I done that same work using my original method, it would have taken me about 55 days of nonstop number-crunching. Additionally, Dr. Mucha’s efforts on my project were supported in part by the National Science Foundation (award number DMS-0645369). Pete Palmer also deserves a hand for his willingness to compile fifty years of data that was essential to running my second permutation. I would be remiss if I didn’t thank all of the volunteers who do work for Retrosheet, whose data made up 100 percent of the information I used in this study; Tom Ruane deserves credit for using Retrosheet data to compile several important files that contained hard-to-find information that I needed for this study. I would also like to thank Chuck Rosciam for reviewing my article, Dr. Alan Reifman of Texas Tech (who runs The Hot Hand in Sports blog at thehothand.blogspot.com) for reading through a preliminary copy of the article, and especially Steve Strogatz and Sam Arbesman of Cornell for offering incredible insight on this topic, for sharing their research with me, and for letting me borrow part of their New York Times article.