SABR, Baseball Statistics, and Computing: The Last Forty Years

This article was written by Richard Schell

This article was published in Fall 2011 Baseball Research Journal

In a recent column in the New York Times, Sean Forman dissected Ryan Howard’s effectiveness as a cleanup hitter. He cited Howard’s OPS and WAR rating and suggested that several other sabermetric measures were similar. He went on to analyze why, given relatively low ratings on each of these stats, Howard was still among the top cleanup hitters in RBIs. Forman looked at the performance of the Phillies’ hitters based on OBP as well as percentage of extra base hits.

Forty years ago, Forman’s column could not have been written. At the time, the analytic techniques did not exist, the data were inaccessible, and the computing power unavailable.

In 1971, the year the Society for American Baseball Research was founded, the analysis of baseball statistics was still in its infancy, and computers were in the hands of few. Computers were expensive to buy, difficult to use, and most were used primarily as scientific instruments and business machines dedicated to single tasks. In that year, Intel introduced the 4004 microprocessor and went into volume production with the first viable semiconductor memory chip, the 1103, and IBM developed the 8-inch floppy drive. All these were precursors to the personal computers that would appear during the next decade. In 1971, the Internet did not exist; its predecessor, ARPANET, linked 23 computers together at universities, private research centers, and government agencies.

In 1971, those interested in doing statistical analysis to study the game of baseball already existed, but since SABR had just been created, the term sabermetrician hadn’t yet been coined. They often worked where computers were available as part of their work or research. Pete Palmer accessed an IBM mainframe in the early ’70s and kept his database on punch cards. In even older times, researchers like George Lindsey and Earnshaw Cook used “tabulators” (fancy adding machines to perform the necessary calculations to draw conclusions) and kept large paper-based accounts of baseball games to help them use these machines to do their work.[fn]According to Alan Schwarz, Lindsey spent hundreds (it could have been thousands) of hours to answer simple questions about baseball strategies. As Schwarz says, “…obviously, he had no computer to handle the computation.” Schwarz also reported that Cook used a slide rule and a Friden calculator. The Numbers Game, 72 and 77.[/fn]

Since 1971, progress in both fields has accelerated greatly, with information technology making possible analysis that wasn’t feasible 40 years ago. Sabermetrics developed alongside the information age, with personal computers enabling those who did not work where computers were easily available to develop their algorithms and analyze data at home. The Internet spawned websites and blogs, connecting people and enabling them to gather and store vast amounts of data. Sabermetrics has gone from something a passionate few studied to something millions access and understand. These days articles in Sports Illustrated talk about zone ratings; Murray Chass and Alan Schwarz have sliced and diced data for columns in the New York Times; Rob Neyer uses statistics and stats jargon extensively in his work for SB Nation. Millions of readers were able to enjoy and understand Sean Forman’s piece on Ryan Howard in the Times.

INCEPTION: THE 1970S

Dick Cramer, an early pioneer in baseball statistical analysis, was fortunate to have access to an IBM computer as a graduate student. He got hooked on programming when he worked with a baseball simulation program. He later wrote his own simulation program for the PDP-1 at the Harvard Research Center. It was during this time that he developed the concept of base-run average, which he later published with Pete Palmer. By 1971, Cramer had left Harvard and no longer had access to a “real computer,” so he used the HP-67 desktop calculator to continue his work. At roughly the same time, Pete Palmer was using an IBM 1800 available to him at his workplace. He amassed a large database, stored on punch cards, which took up thirty drawers in a card file cabinet. He later moved to a CDC 6600 and moved his database to tape storage. It was on these machines that he began to develop his models for run scoring. Palmer met Cramer through SABR, and throughout the early seventies, they collaborated on seminal articles in the Baseball Research Journal, starting in 1974.[fn]Palmer introduced such fundamental concepts as On-base average (1973), home park advantage (1978), and with Cramer base-run percentage (1974).[/fn] According to Cramer, they exchanged typewritten letters through the postal service in the process of developing their manuscripts. Later, their collaboration would lead to Cramer’s involvement with STATS, Inc. Cramer and Palmer were not alone in their work for long. Bill James published an article in the 1977 Baseball Research Journal, the same year he published his first Abstract.[fn]The Bill James Baseball Abstract, 1977.[/fn]

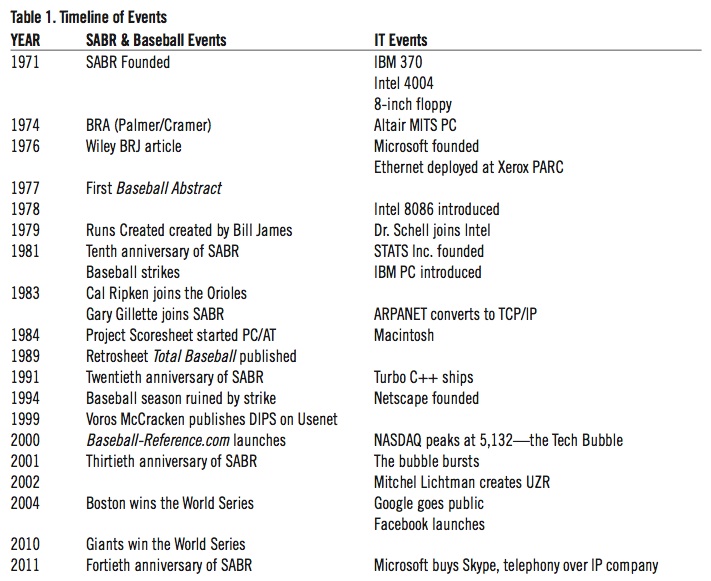

Table 1. Timeline of SABR/Baseball and IT Events


Cramer and Palmer relied on access to workplace computers, as would many of their successors. In 1971, the idea of a “personal” computer was too far-fetched, except for a few computer researchers, to even consider. Two months after SABR was founded, one of the signal events in the history of computing occurred, the introduction of the Intel 4004. The 4004 was the first single-chip CPU available as a commercial product.[fn]The 4004 was developed by a team of Intel engineers. Ted Hoff, the first Intel fellow, was responsible for the concept, Stan Mazor for designing the instruction set. Federico Faggin did the logic and circuit design for the chip, with the assistance of Masatoshi Shima, who was on loan from the first customer for the chip, Busicom.[/fn] The 4004 proved the feasibility of developing a small computer system based entirely on a technology that could be mass-produced.[fn]The MOSFET (metal-oxide semiconductor/field-effect transistor) was the technology Intel was created to commercialize. It would take over the market from other semiconductor companies like Fairchild and Texas Instruments. The 4004 as well as the surrounding memory chips were MOS integrated circuits.[/fn] Intel followed the 4004 quickly with the 8008, an 8-bit microprocessor that enabled a variety of new applications. These processors led to the first commercially available[fn]Xerox is often credited with the creation of the first true personal computer, the Alto, at their Palo Alto Research Center (PARC). These were not the first desktop computers; HP and Wang had both developed programmable desktop calculators that executed stored programs. Dick Cramer, as well as the author, used such machines.[/fn] personal computers, primitive as they were. One of these was a kit, the MITS Altair 8800,[fn]The Altair was the inspiration for Bill Gates and Paul Allen to form Microsoft, to develop software for the new generation of computer.[/fn] first available in 1975, and used principally by hobbyists, the same sort of people who in an earlier era built Heathkit stereos. The Altair is long gone, but it led to the creation of Microsoft; founders Bill Gates and Paul Allen built the first BASIC[fn]BASIC was widely used as a programming language for various forms of personal computer.[/fn] interpreter for the machine.

Computers were not essential for conducting all research. For simple analysis, a hand-calculator and statistics available from Major League Baseball were sufficient. As Alan Schwarz details in his history of baseball statistics, The Numbers Game, people were counting up stats and working with them on paper from the game’s earliest days. However, for some deeper analysis the ability to process large amounts of data quickly was needed. Statistical analysis such as linear regression required significant computing power (at least in the day). The 1976 Baseball Research Journal published an article by George T. Wiley[fn]Wiley was an outstanding undergraduate athlete at Oberlin College. He went on to get a PhD and taught history at the University of Pennsylvania, where he was when he wrote his paper for The Baseball Research Journal. He was inducted into the Oberlin Athletics Hall of Fame in 1998.[/fn] entitled “Computers in Baseball Analysis.” Wiley starts his article with this emphatic statement:

The most significant development in the use of statistics over the past 25 years has been with computers. Mathematical computations that formerly took hours to do by hand are completed by the computer in seconds. Masses of statistical information are now being analyzed in ways never before thought possible.

Wiley’s research concerned a linear regression model in multiple dimensions that related numerous baseball statistics to a team’s ability to win. He analyzed 640 different teams, and correlated 17 different pieces of information to the final standings of those 640 teams. Although today that analysis would be a relatively trivial undertaking, the limits of computing resources forced Wiley to limit his study to the years 1920 through 1959, less than half the games played at the time the article was written.
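To make the idea of a multiple linear regression concrete, here is a minimal sketch in Python. It is not Wiley’s model: it uses two made-up predictors (runs scored and runs allowed per game) rather than his 17 statistics, and the team seasons and winning percentages are synthetic values generated from a known rule so that the fit can be checked. The function names `solve` and `fit_linear` are hypothetical.

```python
def solve(a, b):
    """Solve the linear system a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Build the augmented matrix [a | b].
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Swap in the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    # Back-substitute from the last row upward.
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_linear(rows, targets):
    """Ordinary least squares via the normal equations.

    Returns [intercept, coef_1, ..., coef_k].
    """
    design = [[1.0] + list(r) for r in rows]  # prepend the intercept column
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * t for d, t in zip(design, targets)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical team seasons: (runs scored per game, runs allowed per game).
teams = [(4.0, 4.0), (5.0, 4.0), (4.0, 5.0), (5.0, 3.5), (3.5, 4.5), (4.5, 4.5)]
# Synthetic winning percentages from an invented rule, not real standings.
win_pct = [0.5 + 0.1 * (rs - ra) for rs, ra in teams]

coefs = fit_linear(teams, win_pct)
print(coefs)
```

Because the synthetic winning percentages follow the invented rule exactly, the fit recovers an intercept near 0.5 and coefficients near 0.1 and -0.1. A study on Wiley’s scale would repeat the same arithmetic over 640 team seasons and 17 predictors, which is what made machine computation so valuable at the time.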

When Wiley wrote his article, even the machines in use at research institutions and corporations were relatively limited by today’s standards. For example, the IBM 370 model 158 computed at 1 MIPS and had 87 MB of disk capacity, or about 2 percent of a cell phone’s computing power and storage. When it was introduced in 1971, its primary data input was through punch cards; the IBM 3270 data entry terminal (the most popular data entry terminal in its day) was still one year away.[fn]The IBM 2260 terminal, which was a punch-card-emulation system, was introduced in 1964. It had the ability to transmit punch-card images electronically without producing the actual cards. In some respects, the 3270 was a major leap forward.[/fn], [fn]Marc Andreessen joked in the early days of the Internet browser that the earliest browsers were a return to the 3270. Only with the introduction of multimedia capabilities to the browser was this analogy broken, something that Tim Berners-Lee did not anticipate when he invented the World Wide Web. (Private conversation.)[/fn]

Another event in 1976 would help change the state of computing: Intel started development of the 8086 16-bit microprocessor,[fn]For an early history of the Intel microprocessors, see Intel Microprocessors: 8008 to 8086 by Stephen P. Morse/Bruce W. Ravenel/Stanley Mazor/William B. Pohlman. Dr. Morse was the architect of the 8086 and is still active on the Internet. See stevemorse.org for an online copy of this article.[/fn] whose “little brother,” the 8088[fn]The 8088 used the same instruction set, but was able to directly interface to 8-bit peripherals widely available in the market. This fact made the IBM PC cheaper to build and hence more economically viable than a fully 16-bit computer.[/fn] was the heart of the first IBM PC. It would take another five years before the IBM PC was introduced, but during this time, other widely usable personal computers entered the market and were in use by many of the early sabermetricians. Other significant developments occurred during the 1970s that would benefit analysts in later years. The SQL database access method (and database language) was invented at IBM in 1974. It would be the progenitor for practical databases developed in the 1980s and 1990s.

THE PC ERA: THE 1980S

By 1980, Dick Cramer had switched to using an Apple II, an early personal computer useful in schools and homes. In 1981, Cramer helped form STATS, Inc., and did the bulk of the programming for that venture on his Apple II in UCSD Pascal. By that time, Bill James had published numerous versions of his Baseball Abstract, and Pete Palmer had met John Thorn, with whom he would develop Total Baseball using the database that he had created on cards years earlier.

The personal computer had already become a useful tool by 1980, but the introduction of the IBM PC in 1981 changed the nature of the computer business. It also changed sabermetrics. As Gary Gillette explained to the author,[fn]Private conversation, May 5, 2011.[/fn] “What the PC allowed was a democratization of baseball statistical analysis.” This didn’t happen immediately, as most uses of the IBM PC were in business; home users still used Apple IIs and Commodore 64s. However, as PC clones appeared, prices came down.

The PC enabled non-computer professionals to develop programs using programming languages like BASIC (which Pete Palmer used to develop BAC-Ball for the Philadelphia Phillies and Atlanta Braves), and desktop database tools such as dBASE III and Paradox. Gillette used both when he was running Project Scoresheet. Tom Tippett, David Nichols, and David Smith wrote the input and analysis code for the spreadsheet using Borland’s Turbo C.[fn]InfoWorld, April 2, 1990, 43.[/fn] They later wrote code for Baseball Advanced Media using Turbo C and C++.[fn]Turbo C, Turbo C++, and Paradox were all products developed by Borland International. The author led the development team that produced several versions of Turbo C++.[/fn] (Tippett went on to become director of baseball information services for the Boston Red Sox.) According to Gillette, Bill James, who started Project Scoresheet, opposed computerizing the data, perhaps for reasons similar to those that motivated his opposition to using PCs in the dugout.

Computing power during the PC Era progressed from tens of thousands of instructions per second to many millions. As a point of reference, in the InfoWorld article,[fn]InfoWorld, April 2, 1990.[/fn] Pete Palmer provided an example of searching 10,000 data items in 15 seconds on a PC/AT. The AT processor was capable of 0.9 MIPS in the then-current release. By the end of the ’90s, Intel Pentium III-based processors were 2,000 times as fast.[fn]Today’s extreme Intel microprocessors are theoretically capable of attaining nearly 150,000 MIPS, for applications tuned to their particular architectures.[/fn] (The author’s laptop computer uses an Intel microprocessor that exceeds 2,000 MIPS.) That kind of processing power enables sophisticated modeling, such as the Markov models that Mark Pankin, Tom Tango, and others use extensively in their analyses.[fn]Markov models rely on the manipulation of matrices, special kinds of tables of data. Matrix multiplication, inversion, and other operations are facilitated by high-speed computers.[/fn], [fn]Mark Pankin says that prior to 2000, there were limitations to what he could easily do on a PC, but that since then, he has been able to create his models with relative ease, usually only needing Microsoft Excel to do his work. The author has had the same experience.[/fn]
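The matrix manipulation behind a Markov model can be illustrated with a deliberately tiny example. The sketch below is not the model that Pankin or Tango use: it assumes a toy half-inning whose only states are 0, 1, or 2 outs, with every batter making an out with the same hypothetical probability `p_out` and baserunners ignored. Inverting I - Q, where Q holds the transition probabilities among those states, gives the expected number of batters before the third out. The function names are illustrative only.

```python
from fractions import Fraction


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination using exact fractions.

    Raises StopIteration if the matrix is singular (no nonzero pivot found).
    """
    n = len(matrix)
    # Augment each row with the matching row of the identity matrix.
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Find a row with a nonzero pivot and swap it into place.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [x / scale for x in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    # The right-hand half of the augmented matrix is now the inverse.
    return [row[n:] for row in aug]


def expected_batters(p_out):
    """Expected batters until the third out, starting from 0, 1, and 2 outs."""
    p_out = Fraction(p_out)
    stay = 1 - p_out  # batter does not make an out; the out count is unchanged
    # Q: transition probabilities among the non-final states (0, 1, 2 outs).
    q = [[stay, p_out, 0],
         [0, stay, p_out],
         [0, 0, stay]]
    i_minus_q = [[int(i == j) - q[i][j] for j in range(3)] for i in range(3)]
    fundamental = invert(i_minus_q)
    # Each row sum is the expected number of steps before the chain ends.
    return [sum(row) for row in fundamental]


print(expected_batters(Fraction(2, 3)))
```

With `p_out` set to 2/3, the result is 9/2 batters from no outs, 3 from one out, and 3/2 from two outs, which matches the direct calculation of remaining outs divided by `p_out`. Models that also track baserunners need many more states, and that growth in matrix size is where faster processors made the difference.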

Desktop publishing enabled researchers to combine their numerical data and the written word and to easily cast it into a form that could be made into articles and books. All it took was a word processor and access to a spreadsheet and/or a database. Nonetheless, sharing information generally required sharing diskettes (or the printed word). Versions of the Total Baseball database were made available on diskettes in the late 1980s and early 1990s, for example. Into the early nineties, many corporations lacked local area networks. Communication among computers, even for small groups of people working together, was difficult at best.[fn]The STATS team of Palmer, Cramer, and Jay Bennett attempted to connect an Apple II with a PDP-10 to facilitate their work. With low speed connections and interface incompatibilities, this proved frustrating, according to Cramer.[/fn] Bulletin board systems (BBS) were just coming into popularity, and AOL was one of the few online services available. That was to change shortly, and the changes would impact tremendously the way information and ideas would be shared.

THE EMERGENCE OF THE WORLD WIDE WEB: 1991-2000

The next significant event in computing, and one that has had the most impact on not only baseball research, but our entire way of life, was the emergence of the Internet and World Wide Web. It has led to the creation of significant resources for research and analysis in every field—and it allows us all to conduct most everyday aspects of our lives online, too.

The Internet started as a loose collection of smaller networks (hence the name) in the mid-to-late 1980s.[fn]The proliferation of networks started with NSFNET, a sort of clone of the second generation ARPANET used by NSF researchers, opening up connections to small commercial networks. The widespread adoption of the TCP/IP protocol (see the next note) was critical to this occurring.[/fn] It remained primarily a research tool, except for a few technology companies and government contractors who connected to it to exchange information. Among the earliest methods of information exchange was email. We can’t take email for granted. Before the ability to swamp one another with daily missives became commonplace, exchanges of statistical information were usually done with typewritten (occasionally printed) pages, sent via snail mail. Bill James remarked in an interview for volume 14 of The Baseball Research Journal that he had received a letter with important information that had led him in a different direction, and then commented that because people are in communication with one another, it is possible to make adjustments.[fn]Jay Feldman, in A Conversation with Bill James, The Baseball Research Journal 14, 26.[/fn] That is very true today, and email was just the first important step forward in increasing the frequency and detail of those communications. Even SABR uses email extensively in communicating with members, both at the national and regional levels, as a way to effectively communicate in a timely fashion—something SABR’s paper-printed newsletters often did not do.

Groups of users exchanged information on Usenet, a kind of global bulletin board system, which was a precursor to a number of social media phenomena that have occurred in the last decade. Usenet was home to a few hardcore analysts, such as Voros McCracken. McCracken published his seminal, and sometimes controversial, work on pitching stats on Usenet, but became widely known when Bill James later highlighted his work. Usenet facilitated exchanges of information, much like modern blogs, but it was still inaccessible to nearly everyone, as only 1 in 10 Americans had access to the Internet in the early 1990s, and only 1 in 10 of them accessed Usenet.

The next step forward in the Internet was the creation of the World Wide Web[fn]The Web, as well as email, newsgroups, streaming media, and other things available through the Internet, are applications. The Internet protocols provide a platform for those. Many people conflate the terms Web and Internet, which is technically incorrect.[/fn] in 1991. The Web was conceived of as a way to interchange not just messages, but documents. Its creation was accompanied by the creation of a new application, the web browser. The Web browser was made popular among universities and research institutions by the NCSA Mosaic browser.[fn]Tim Berners-Lee at CERN wrote the first browser, WorldWideWeb, for the NeXT computer in 1990.[/fn] Developers of that browser left the University of Illinois and independently developed the first commercial Web browser at Netscape Communications; it was released in beta late in 1994. The following year, accompanying the initial commercial shipments and the integration of the Internet’s key communication methods (called protocols[fn]The backbone of the Internet and WWW are the twin protocols, TCP and IP, generally abbreviated to TCP/IP. TCP/IP replaced an earlier ARPANET protocol in 1983. Prior to 1985, it was very difficult, if not impossible, for average users to use TCP/IP on their computers; even computer-savvy individuals had to exert considerable effort to get TCP/IP working on a PC. Windows 95 made it easy, helping usher in the Web.[/fn]) into Microsoft’s Windows 95, the Web blossomed into a true consumer phenomenon.

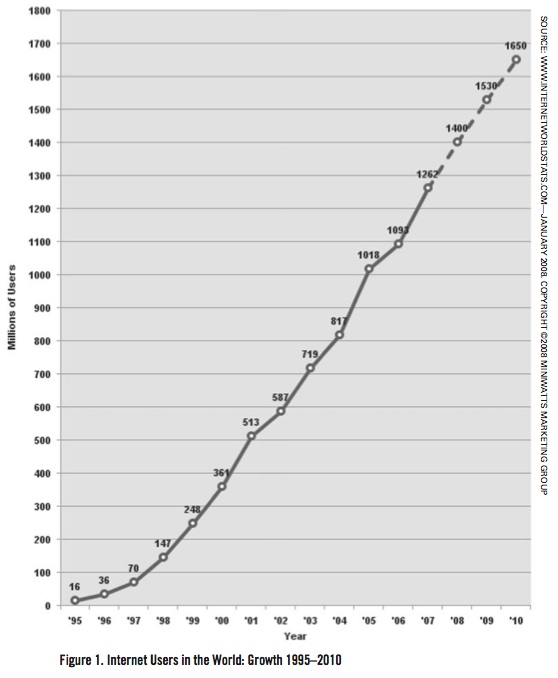

Table 2. Internet Users in the World.


It took but a few years before the Web started to heavily influence baseball research. In 1995, Sean Lahman made his baseball database available over the Internet. Tom Tango credits the Lahman database with a major increase in his productivity.[fn]Email communication.[/fn] Another significant event was the creation of the Retrosheet website in 1996. Prior to 1996, Retrosheet used a small collection of individuals as well as the resources of teams and sportswriters to gather data, very slowly at first. The introduction of the website started a slow gathering of momentum that increased with each subsequent year. In 1996, the Web was still fairly nascent, even though tens of millions of people were using web browsers. Few people had access to more than slow dial-up connections, which made all but the simplest forms of media frustrating to download. Students in universities and researchers were, again, the earliest adopters of the new technology. And like their predecessors who used workplace mainframes and university computing resources for their baseball analysis, many university students weren’t always using the Web for class research.

Internet usage roughly tripled between 1995 and 2000, and would do so again during the following decade (see the Internet growth graph in Table 2). Along with the accelerated adoption came both problems and new applications. The latter would benefit baseball analysis greatly.

THE NEXT GENERATION WEB: 2001-2011

In 2000, Sean Forman launched the first of several websites that made up the Sports Reference collection, Baseball-Reference.com. Forman was a professor of mathematics and computer science at St. Joseph’s University at the time. Within six years, Baseball-Reference and its companion reference sites had become big business and Forman left his university job to run Sports Reference LLC full-time. At the same time, Retrosheet used its online outreach to gather and process box scores for nearly every year from 1911 on, and to provide additional detail wherever it is available. (Retrosheet also provides a venue for researchers to publish articles, although this is a secondary contribution for the website and project.)

In 2000, the so-called dot-com bubble burst. In the aftermath of that, however, the Web became even more widely available than it had been before. Cable service and DSL made fast Internet connections available to a large population. New applications, better tuned to users’ needs than failed online retail sites trying to sell pet food, sprang up. First was the search application, driven by Yahoo and then Google. Social network sites emerged, first slowly, and then like a firestorm. The Internet had moved to mobile devices by the end of the decade. Communications technology changed rapidly during this decade, progressing from 9600 baud modems, to 56K, to multi-megabit DSL and cable transmission, and now to wireless mobile phone networks. These advances in the available data rate to all users made access to rich media and massive amounts of data easy.

Widespread use and quick access, along with online databases, spurred the development of such applications as UZR, Ultimate Zone Rating, the invention of Mitchel Lichtman. UZR uses data regarding the zone in which a batted ball lands, data that were previously not recorded by scorekeepers or Major League Baseball. Volunteers recorded such data and uploaded them. Without such data, Lichtman would not have been able to develop his innovative approach. Today, game-time information can be kept on a tablet device and immediately uploaded to the appropriate database. However, with online applications such as MLB.com’s Gameday, and the development of MLBAM’s Fieldf/x system (in which the flight of every batted ball and the positioning of every fielder is digitized and recorded) even this approach will become obsolete.

If the PC era democratized analyzing baseball data, the Internet blog[fn]Short for weblog, originally a primitive way to keep an online diary.[/fn] democratized reporting and editorializing about nearly every aspect of baseball. It is difficult to determine the exact number of baseball blogs (they certainly run into the millions), but a large number have become popular with avid fans (and statheads like the author). Some are regional, some are devoted to teams. Some websites, such as FanGraphs, are dedicated to the analysis and reporting of the most esoteric of baseball stats like UZR and WAR. FanGraphs also publishes its own Power Ratings. Baseball Prospectus was founded in 1996 and grew out of a group of statistically minded friends who met through Usenet. Baseball Think Factory, Diamond Mind, Bleacher Report, and many other websites feed information to a number of amateur baseball historians and stats fanatics.

The other phenomenon that is likely to have a powerful effect on communication within the community is social networking, with both social sites such as Facebook and professional sites like LinkedIn enabling people to connect one-to-one and in groups. This kind of online communication replaces the old mailing list and telephone tree with immediate contact and spread of information, allowing for easier harnessing of the group mind and faster propagation of new ideas and research.

THE IMPACT ON RESEARCHERS AND THE REST OF US

In 1971, there were few computer professionals. Most researchers into baseball stats used computers as an adjunct. Few universities offered degrees in information technology and a handful of companies employed the majority of IT professionals. That has all changed. There are more of us now who are trained in information technology—Sean Forman and Sean Lahman are good examples. But more than that, high school kids are now taught to program as part of their regular curriculum and even those who don’t program can easily create blogs and websites. Desktop applications like Microsoft Excel and Access can turn non-programmers into data processing mavens. Social sites and email allow us to stay in constant communication and exchange ideas. People may be unaware, or may have forgotten, that the World Wide Web was invented as a tool for sharing research, not selling ads or pet food. The web continues to be effective in that vein today.

The ability to analyze massive volumes of statistics has had a significant impact on ballclubs, as well. Many employ consultants to do statistical analysis as a way to better deploy players, to determine best trades, and to help in salary negotiations. Some, like the Boston Red Sox, have departments dedicated to information services. The Red Sox have employed James and Tippett as well as other SABR members; the Mariners have employed Tom Tango, the Orioles Eddie Epstein, and the Phillies and Braves Pete Palmer. Tango has also done work for the St. Louis Cardinals, along with Lichtman.

It goes beyond the researcher. Tablets and home computers provide a great platform for watching or listening to games, providing relevant stats and information live during streaming of game media. This author has observed his fellow fans Googling baseball data on their phones to settle arguments, or merely to keep up to date. We can all look forward to more leverage of our current and future computing environments for research, as well as to enhancing the experience of the ordinary fan.

When SABR was founded, computing was on the cusp of moving from an expensive tool for research and big business to a part of our everyday lives, a process that has paralleled the evolution of sabermetrics and of SABR as an organization. We can only expect more interesting perspectives of the game to emerge from advances in information technology, something to look forward to.

ACKNOWLEDGEMENTS

The author is indebted to Dick Cramer, Gary Gillette, Pete Palmer, Mark Pankin, and Tom Tango, as well as Sean Lahman and Sean Forman, for their assistance. They have helped recreate the history of sabermetrics, supplied the record of who was contributing when and what, and provided important data. The author would also like to thank the Computer Museum in Palo Alto for their work in maintaining the historical record of computing. Also, without such companies as Intel, Netscape, Microsoft, Google, and others, we would not have the rich computing environments that allow us to do the work we do today.

RICHARD SCHELL has been involved with computing for decades. At the time SABR was founded, he was finishing his BA in Mathematics and Computer Science, while working as a computer programmer affiliated with the PLATO Project at the University of Illinois. Since then, he has held numerous technical and senior management positions in the industry with companies such as Intel Corporation, Sun Microsystems, and Netscape Communications. Dr. Schell is currently a venture partner with ONSET Ventures. He is an avid baseball fan and a San Francisco Giants season-ticket holder. Dr. Schell has been a SABR member since 2007.