2021 SABR Analytics: Watch highlights from the MLB Statcast Player Pose Tracking and Visualization panel

At the SABR Virtual Analytics Conference on Sunday, March 14, 2021, a panel discussion was held on Player Pose Tracking and Visualization in the MLB Statcast system.

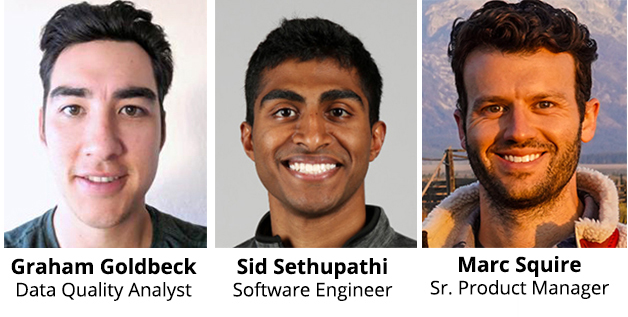

Panelists included Graham Goldbeck, Data Quality Analyst at Major League Baseball; Sid Sethupathi, MLB Software Engineer; and Marc Squire, MLB Senior Product Manager.

- Video: Click here to watch a replay of the MLB Statcast Player Pose Tracking and Visualization panel on YouTube

- Audio: Click here to listen to the MLB Statcast Player Pose Tracking and Visualization panel (MP3; 35:01)

Here are some highlights:

ON HOW LIMB TRACKING DATA IS CAPTURED

- Goldbeck: “Hawk-Eye’s tracking camera system is made up of 12 cameras. Five are focused primarily on pitch-to-plate tracking and seven on the players. But all cameras contribute to the limb tracking in some way. The video that is captured through these cameras is calibrated and run through computer vision that fits the model to the images, and then it ends up outputting an XYZ value and a timestamp. All this data comes across in near-real time, which is pretty amazing when you think about it. We used to need to hook people up to (motion capture) marker-based systems in a lab to generate anything resembling this amount of data. Think of the old videos you may have seen of players suiting up for MLB The Show and getting wired up to track all this information. Now we’re getting it pretty much live during actual games.”

ON 3-D VISUALIZATIONS

- Squire: “The 3-D visualizations use tracking data from 18 points on every player’s body to create a three-dimensional representation of actual plays from Major League Baseball games. The flexibility of the 3-D rendering means that fans will be able to re-watch some of MLB’s most exciting players from angles that broadcast cameras simply can’t capture. So, imagine you’re watching a sharply hit line drive down the third-base line but from the eyes of the third baseman, or you’re chasing down a fly ball from the center fielder’s perspective, or you’re standing in the batter’s box watching Clayton Kershaw’s curveball as it’s coming in. In the future, this product will continually look for ways to leverage this next-gen technology to tell the story of baseball in compelling ways that help create deeper bonds between fans and the game.”

ON 3-D BENEFITS BEYOND FAN ENGAGEMENT

- Squire: “We think these visualizations provide new ways for coaches to actually break down plays for their players. Whether you’re a Little League coach, a high school coach, or potentially even in the majors, some of these drone cam views that we’ve shown can really show how players move around the field both in direct and indirect reaction to where the ball is hit. … So we’re excited about the potential applications to teach and coach as well through this sort of tool.”

ON MAPPING PLAYER MOVEMENT

- Sethupathi: “One of the challenges that we faced in doing this animation project was, how do we map player movement from the discrete data points that we have access to? As Graham showed, we have 18 points on the players and in those earlier prototypes, the way we were visualizing a player was drawing joints in between these track points. You get a rough silhouette of the player, but it’s not really that humanoid figure that we’re shooting for. From the right side what we’re trying to get to is more of an animation model that’s driven by an armature and that armature is sort of a rigid structure. So our challenge is, how do we get from our track data points into driving this armature?”

ON INVERSE KINEMATICS

- Sethupathi: “We start out with bones in a tree and we traverse through that tree. And at first we’ll take our tracking vector, which is the bone representing our tracking data. Imagine our left shoulder, and it would be our elbow and our shoulder joints. Elbow would be the end, shoulder would be the start. We find that vector in our tracking data. We normalize it to get the unit vector, and then move on to the actual rig bone and do the same process. … In our rig, we find the elbow and then the shoulder, calculate that vector and normalize it to the unit vector. And now the next step is how do we find the rotation to get from our rig vector to our tracking vector. We use quaternions. … From an applied math perspective, it’s very useful for describing rotations because it does a lot of things. It’s very useful for computer interpretations of rotations … we’ll use our quaternion library and basically find the rotation that takes us from the rig’s location to our tracked data location. And once we have that rotation, we set the rotation on the bone, and then we traverse to the next bone itself.”
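The per-bone step Sethupathi describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not MLB's implementation: the joint coordinates, the `rig` and `tracked` dictionaries, and every function name below are hypothetical, and a small hand-written quaternion routine stands in for the quaternion library mentioned in the talk. The sketch builds the shoulder-to-elbow unit vector in both the rig and the tracking data, then finds the shortest-arc quaternion that rotates the rig vector onto the tracked one.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("zero-length bone vector")
    return tuple(c / n for c in v)

def rotation_between(u, v):
    """Unit quaternion (w, x, y, z) rotating unit vector u onto unit vector v."""
    d = dot(u, v)
    if d < -1.0 + 1e-9:
        # Vectors point in opposite directions: rotate 180 degrees
        # about any axis perpendicular to u.
        helper = (1.0, 0.0, 0.0) if abs(u[0]) < 0.9 else (0.0, 1.0, 0.0)
        return (0.0, *normalize(cross(u, helper)))
    # Shortest-arc rotation: q = (1 + u.v, u x v), then normalize.
    w = 1.0 + d
    x, y, z = cross(u, v)
    n = math.sqrt(w * w + x * x + y * y + z * z)
    return (w / n, x / n, y / n, z / n)

def rotate(q, v):
    """Apply unit quaternion q to vector v."""
    w, qv = q[0], q[1:]
    t = tuple(2.0 * c for c in cross(qv, v))
    c2 = cross(qv, t)
    return tuple(v[i] + w * t[i] + c2[i] for i in range(3))

def bone_rotation(rig_start, rig_end, track_start, track_end):
    """Rotation taking the rig bone direction onto the tracked bone direction."""
    rig_dir = normalize(sub(rig_end, rig_start))
    track_dir = normalize(sub(track_end, track_start))
    return rotation_between(rig_dir, track_dir)

# Hypothetical joint positions (arbitrary units), for illustration only.
rig = {"shoulder": (0.0, 0.0, 0.0), "elbow": (1.0, 0.0, 0.0)}
tracked = {"shoulder": (0.2, 1.4, 0.1), "elbow": (0.2, 1.1, 0.5)}

q = bone_rotation(rig["shoulder"], rig["elbow"],
                  tracked["shoulder"], tracked["elbow"])
print("upper-arm rotation (w, x, y, z):", q)
```

In a full rig this step would be repeated for each bone while walking the bone tree from parent to child, and each rotation would likely need to be expressed relative to the parent bone's orientation. The sketch leaves that out and handles a single bone in world space.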

ON THE PURPOSE FOR TRACKING UMPIRES

- Goldbeck: “The way that the tracking system works is it tracks whatever it sees in the field, and then we assign who that person is afterwards. So, the umpires are kind of a bonus side effect of that. But they also are part of the scene. As you can see, if we look at 3-D visuals like the umpire being there, their reactions to stuff I think is pretty valuable information.”

To learn more, visit SABR.org/analytics.

Transcription assistance from Alex Weiner.

Originally published: June 1, 2021. Last Updated: March 23, 2021.